Thank you for your interest in this post! We’re undergoing a rebranding process, so please excuse us if some names are out of date.

Written by Teemu Paivinen

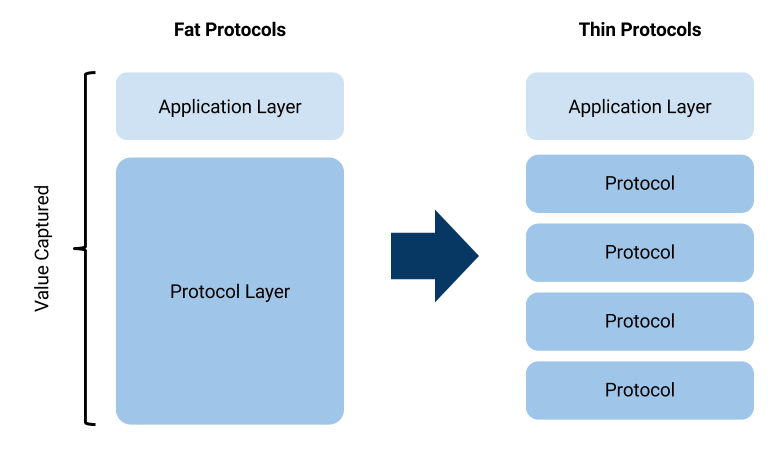

In 2016 Joel Monegro wrote a blog post titled “Fat Protocols”. In it, he argues that while the previous internet stack resulted in most of the wealth being captured at the application level (Facebook, Amazon, etc.), the blockchain stack will see most of the wealth captured at the protocol level (Ethereum, Bitcoin, etc.).

I believe Joel’s thesis is correct, in that protocols in aggregate will capture much of the value that previously accrued to the application layer. In the blockchain technology stack, the application layer mainly serves as the user interface, while various protocols handle much of the underlying functionality and thus capture most of the value.

However, I’d like to propose that while these protocols in aggregate will continue to capture most of the value, individual protocols will in fact be quite thin and tend towards capturing minimal value, due to the combined effects of forking and competitive market forces.

Always Be Forking

Blockchain protocols are unusual in that they can be forked. This means that if you don’t like how a protocol works, you can copy it and create your own iteration. This makes it easy to create a protocol tailored to your exact needs, or simply to maintain an identical copy that avoids problematic updates to the original. No one needs to rely on a generic solution someone else has created. We’ve already seen this begin to happen with the Bitcoin Cash and Ethereum Classic forks.

Forking, then, allows the creation of a large number of competing protocols. This is great not only for innovation, as many slightly differentiated permutations of a protocol are tested in parallel, but also for efficiency. Beyond forking, developers can of course also build competing protocols from scratch with improved technology or better economics.

Every developer building blockchain technology has an incentive to use the best tools for the application they are building and to provide their service at the lowest possible cost, because if they don’t, someone will simply fork it or build a competing protocol. A generic protocol (one with a broad use case) is unlikely to provide the best possible technology and incentives for every use case in the long term, so there is an incentive to compete.

So, if a protocol is “fat” and capturing a disproportionate amount of value, it’s likely ripe for a fork or a competing protocol, where the new parallel protocol would specialise in providing some portion of the functionality more efficiently. In this sense, I believe “fatness” is actually an indication of inefficiency, or of some other advantage the protocol enjoys.

The Great Thinning

We are currently in a period where there are still marginal benefits to scale. The simplest example of this is Bitcoin, which is clearly the most widely accepted cryptocurrency for payments. Ethereum also currently benefits from scale, as the ERC-20 token standard makes it easy for wallet providers to support any newly launched token, in turn making fundraising for a new project easier.

The total market capitalisation of the industry is also still small enough that the perceived big opportunities lie mainly in the lowest-level networks: networks you can build other networks on top of (Ethereum, District0x, Neo, etc.). As interchain interoperability improves with solutions like Polkadot (interchain transfer), 0x (decentralised exchange) and ZeppelinOS (operating system & protocol marketplace), the marginal benefits of scale decrease. At the same time, the industry is growing, so the perceived opportunity in more niche areas grows. Right now a highly specialised protocol might look like a $50M opportunity, but if the total market capitalisation of the industry were 10x higher in the future, that same opportunity would be worth $500M.

As these forces push the industry towards greater specialisation, and forking allows almost unlimited competition without the anti-competitive information and data advantages of the traditional technology industry, it would seem that protocols can only get thinner.

In the end I think the blockchain technology stack will actually look more like this:

The word “stack” may be slightly misleading, as these protocols are not necessarily “on top” of each other, though they can be.

I use the word “stack” here slightly differently than usual. A normal stack refers to the layers of technology in a traditional application: back-end, middle layer and front-end. Here it refers to the various functions an application may wish to use, such as governance, file storage, processing power, etc. All these functional protocols would also be interchangeable.

To look at these market forces from a different perspective: in the traditional economy, the marginal benefits of scale seem quite resilient. Because there is no equivalent of forking and little transparency, effective competition becomes extremely difficult once a company has reached a certain scale.

A graph of these benefits against the perceived market opportunity (we can use market capitalisation as a proxy) in the traditional economy might look something like this:

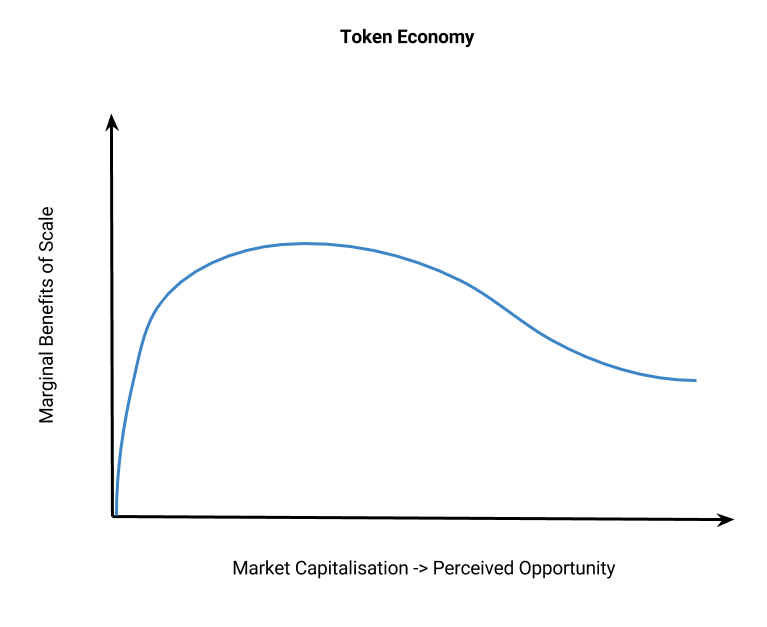

In the token economy the graph looks quite different, primarily due to the features described earlier in this post:

- Ease of forking

- Incentive to compete once perceived opportunity is large enough

- Improved interoperability and interchangeability of protocols

If the assumptions in this post hold true, the same graph for the token economy might look something like this:

Equilibrium

Of course, there are also diminishing returns to specialisation, just as there are to scale. For example, there are usually benefits to having more than one developer working on a protocol. On the other hand, there are also benefits to not having too many developers working on a protocol.

From a code review standpoint, having a large number of developers audit protocol code for security vulnerabilities is certainly an advantage. Here there aren’t necessarily any downsides to scale, but there are likely codebases simple enough that a smaller number of reviewers is acceptable from a security standpoint.

We can also look at network users or applications. There are certainly benefits to having more than one user or application on a network. On the other hand, a large number of users or applications indicates a “fat” protocol and likely means the protocol has a large market capitalisation, creating an incentive for competition, either by forking or by creating a specialised network, as long as the three primary features hold (ease of forking, large perceived opportunity and interoperability of protocols).

I propose, then, that there is an equilibrium the market will always tend towards but never reach, in which the following is true:

- There are no marginal benefits to greater or smaller scale

- There are no perceived opportunities large enough to incentivise additional specialisation

- There is enough diversity in protocol permutations for there to be no incentive to fork

The best part of all this is that, assuming all I’ve proposed is true, the biggest winner is in fact the end-user. Cheaper, more specialised protocols and almost perfect competition all work in the end-user’s favour, and that’s a great thing.

I’d love to hear your feedback! Feel free to tweet me with any thoughts or ideas you may have regarding this post and thanks for reading.

Thanks to Santiago Palladino, Demian Brener, Francisco Giordano & Alejandro Santander from the Zeppelin team for giving me feedback during the writing of this post.